Emp has a different perspective on goal expectancies. After contrasting the nature of football with cricket and the NFL, completely irrelevantly because the games are not even close in style or format, he writes:

Football isn't remotely like that though. Teams aren't equally likely to score throughout the game since they can bolster their defence by squandering attacking chances, throw everyone forward and everything in between. I refuse to believe that all of those highly different situations have goal expectancies that are even remotely close to each other.

Secondly, which strategy the teams will use is highly dependent on the score-line at the time, and thus a static goal-expectancy cannot be the most accurate way of doing this. I know it can still be profitable, but that's very different from it being optimal or theoretically correct. This is like looking at batting stats and calculating "run expectancy", which would do much worse than an experienced cricket fan just watching and betting based on his understanding.

Also, your final para attacks a rather ragged straw-man. No one who was using a model based on those considerations would use such crude categorizations or stats. I saw you making fun of a system based on this which was hilariously crude, but that doesn't mean one can't classify teams in an intelligent manner. Alas, I don't want to divulge too many details of how my model works, so I won't go in depth, but I will say that performances of "Team Type X" vs "Team Type Y" are in my opinion a more accurate determinant of the result of a football match than any goal expectancy model.

Clearly any one match can be approached by the two teams in a number of ways, and depending on the current score they may well change their in-play approach, but pre-game goal expectancies should be arrived at after looking at a number of factors. The fact that the closing lines are so accurate is a reflection of the ability of number crunchers to estimate very accurate expectancies.

Once a game goes in-play those estimates may or may not prove to be in line with expectations, but Emp’s assertion that the observed and the expected aren’t “even remotely close to each other” is somewhat off target. The expectation may be for the home team to score 1.5, and of course on occasion the actual number may not even be close (e.g. they score 6), but the long-term average will be close to 1.5. If it’s not, your model needs some work.

Games where the expected isn’t remotely close to the actual are called outliers. West Bromwich Albion’s 5-5 draw with Manchester United on the last day of the 2012-13 season was an outlier. Occurring as it did on the last day of the season, with many teams’ final placings already set, such matches do tend to be played in a more open style, but even so a ten-goal draw was quite unexpected.

United’s 1-6 home defeat to Manchester City a couple of seasons ago was an outlier. United hadn’t conceded six at home since 1930. Such results happen once in a “blue moon” (it’s why we play the games), but the average over a large number of matches will be very close to expectations.

Emp does understand that once a game is in play, the current score affects the goal expectancies of the teams. Goals beget goals as the saying goes, meaning that, as John Haigh puts it: “the more goals that have already been scored up to the present time, the greater the average number of goals in the rest of the match”.

It’s also well known, and statistically proven, that more goals are scored later in a game, “but these two points are second order factors: by and large, the simple model which assumes that goals come along at random at some average rate, and irrespective of the score, fits the data quite well”.
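To put some rough numbers on that simple model, here is a minimal sketch in Python. It is an illustration only, not anything taken from Haigh, and it assumes each team's goals follow an independent Poisson distribution at a constant rate, which is exactly the simplification that the two second order factors depart from. The function names and the 1.5 and 1.1 expectancies are made up for the example.

```python
import math


def poisson_pmf(goals: int, rate: float) -> float:
    """Probability of exactly `goals` goals when the expectancy is `rate`."""
    return math.exp(-rate) * rate ** goals / math.factorial(goals)


def match_probabilities(home_exp: float, away_exp: float, max_goals: int = 15):
    """Return (home win, draw, away win) probabilities.

    Assumes the two teams' goal counts are independent Poisson variables,
    and ignores scorelines above `max_goals` per team (a negligible tail
    for realistic expectancies).
    """
    home = draw = away = 0.0
    for h in range(max_goals + 1):
        for a in range(max_goals + 1):
            p = poisson_pmf(h, home_exp) * poisson_pmf(a, away_exp)
            if h > a:
                home += p
            elif h == a:
                draw += p
            else:
                away += p
    return home, draw, away


def prob_at_least(goals: int, rate: float) -> float:
    """Probability of scoring `goals` or more when the expectancy is `rate`."""
    return 1.0 - sum(poisson_pmf(k, rate) for k in range(goals))


if __name__ == "__main__":
    # Hypothetical expectancies, chosen only for illustration.
    home_win, draw, away_win = match_probabilities(1.5, 1.1)
    print(f"home {home_win:.3f}  draw {draw:.3f}  away {away_win:.3f}")
    print(f"P(home scores 6+) = {prob_at_least(6, 1.5):.4f}")
```

Under these assumptions a team expected to score 1.5 gets six or more well under 1% of the time, which is why such a result looks like an outlier without meaning the 1.5 was wrong.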

It’s important to understand that your estimates of goal expectancies are not always going to be correct. That would be an impossible task, but what is quite possible is to come up with reasonably close predictions.

I mentioned earlier that if your model isn’t close to the actual results being observed, you need to change it. I keep track of all my spreadsheet’s expectations, and make sure that (long-term) they are close to the actual results. Obviously you need a large amount of data before drawing any conclusions, but if your expectation is for a team to score 1.5 (on average) then after a few hundred observations, the average should be very close to 1.5. If it is 1.0, and your 1.4 expectation is actually 0.9, your 1.3 is 0.8 and so on, you are clearly expecting too much, and you need to look at your numbers. In this case perhaps increase your zero-inflation?
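That kind of check can be sketched in a few lines of Python. This is an illustrative sketch, not the spreadsheet itself: the `calibration_table` name, the rounding of expectancies to one decimal place and the sample records are all assumptions made for the example.

```python
from collections import defaultdict


def calibration_table(records, min_obs: int = 1):
    """Compare expected goals with the average actually observed.

    `records` is an iterable of (expected_goals, actual_goals) pairs.
    Expectancies are bucketed to one decimal place (an assumption made
    for this sketch). Returns {expectancy: (count, mean_actual, gap)},
    where gap = mean_actual - expectancy.
    """
    buckets = defaultdict(list)
    for expected, actual in records:
        buckets[round(expected, 1)].append(actual)

    table = {}
    for expected, actuals in sorted(buckets.items()):
        if len(actuals) < min_obs:
            continue  # too few observations to say anything
        mean_actual = sum(actuals) / len(actuals)
        table[expected] = (len(actuals), mean_actual, mean_actual - expected)
    return table


if __name__ == "__main__":
    # Made-up records for illustration only; a real check needs
    # a few hundred observations per bucket.
    sample = [(1.5, 1), (1.5, 2), (1.5, 1), (1.5, 2), (1.3, 0), (1.3, 1)]
    for expected, (n, mean_actual, gap) in calibration_table(sample).items():
        print(f"expected {expected:.1f}  n={n}  actual {mean_actual:.2f}  gap {gap:+.2f}")
```

A consistently negative gap across buckets (1.5 coming in at 1.0, 1.4 at 0.9 and so on) is the "expecting too much" pattern, and `min_obs` can be raised so that thin buckets are ignored.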

Anonymous commented that:

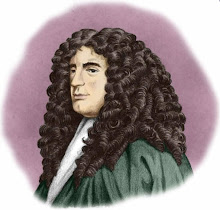

Expectation of a goal is dependent on the current score and all the variables that are known to impede and accelerate goal production such as a red card and an early away goal. The flaw in any model based on goal expectancy is that a) goal to shot on target ratio is not consistent game by game and b) there is not a predictive model that can predict the early away goal or the red card or lack of motivation or human error etc.

Some good points on the surface, but Anonymous falls into the trap first noted poetically by Voltaire, who wrote:

Dans ses écrits, un sage Italien
Dit que le mieux est l'ennemi du bien.

“The best is the enemy of the good”, although it is probably just a coincidence that Voltaire should write of ‘a wise Italian saying this in his writings’... Probably.

Indeed goal expectancy will change as the drama of the game unfolds but no model will ever be perfect. If such a model could be created, no one would be interested in football any more. Football isn’t meant to be predictable. It is the unpredictable, random, variable nature of the sport that makes it the world’s most popular game, but the inability to develop a perfect model isn’t a reason not to develop a good model, and not being perfect doesn’t make it flawed in the sense that it is invalid. Yes, it is ‘flawed’ in the sense that it isn’t perfect, it won’t predict every result correctly, but a model isn’t designed to predict one match correctly.

There are a lot of football matches played, and if your model – on average – can identify value, then by any measure, that is a success. You don’t give up on it just because in a game or two, there was an early away goal, or a red card or a header into the side-netting was erroneously awarded as a goal and your predictions were 'losers' as a result (even if the game is replayed as seems likely*). Of course these things will happen, but you accept them as part of the game, and sometimes these unexpected events work in your favour anyway.

I keep track of the number of times one of my XX Draw wins is taken away after the 80th minute (so far this season ) and while it’s annoying, in the long-term it is balanced out by late goals that generate a win from a previously losing position. It would be ridiculous to scrap a model that has been profitable over 1,803 games just because it didn’t predict that Valencia would score a 90’+4’ goal and ruin a perfect draw. Look at the big picture, accept that your model will never be perfect but go ahead and build something good anyway.

My XX Draws have already had results such as 0-7, 6-1 and 6-2 this season, which taken on their own would be somewhat embarrassing, but when you see these outlier results in the context of the total 1,803 matches, they are more easily accepted. And the 5-5 draw I mentioned earlier was actually an XX Draw selection, an example of how the unexpected can sometimes work for you.

"We're so embarrassed that the goal was given, but we can't be held responsible. Hoffenheim have spent such a lot of money on a nice stadium. Maybe next time they should buy some proper nets."

6 comments:

Your goal expectancy should already include the 'game state' information - just not with the correct weightings.

I would like to know where Emp gets the data for all of those highly different situations. As far as I am aware the only place to get goals data (and more importantly shots data) given the game state is companies like Opta. Unless anybody else knows any good sources?

I'm not sure that there would be much improvement anyway, or should I say enough to justify the extra effort required.

On a separate note - does anybody combine the XX Draws selections with any bookmaker promotions?

Think you may have missed the point Cassini.

Expectation of a goal is known to be dependent on the current score and there are variables in a game that can not be controlled that will accelerate and impede goal production.

It is the game state that will affect accuracy and shot on target production. If, for example, the game is 1-0 to the home team at half time, you can look historically to see how this affects shot on target production for the home team and the away team in the second half, and should the game go to 1-1 at any time you can repeat the process.

No model is perfect, but the trap you are falling into is not accepting that in-play models are far more precise than static pre-off models, because you can react to events such as a red card, an early away goal or a 1-0 half-time game state if you understand how they affect shot on target production and accuracy.

Sorry for the added comment, but I feel that I may not have made my point in the best way when giving my cricket analogy.

What I was trying to say with cricket is that playing styles depend heavily on situations. For instance, you can compute M.S. Dhoni's strike rate and average, and contrast those with the bowlers' economy rates and averages. That particular calculation won't yield a reliable prediction of whether a team of 11 M.S. Dhonis is likely to achieve a particular target. Why not? Because his averages and strike rates are an aggregate of his performance over various highly different situations. Batsmen don't bat the same way with 25 overs and 5 wickets left as they would with 5 overs and 7 wickets left.

Similarly, and this is the point I was making, football teams don't play the same way when they are leading by two goals and when they are level with 15 minutes left. Stronger teams in particular, are disproportionately likely to score goals (at a rate above their goal expectancy) when the situation absolutely requires them to score.

There's no question at all that a highly accurate goal expectancy model (like Cassini's) will be very profitable, and there's no way I was suggesting he should scrap it. What I was saying is that an equally sophisticated model that measures relative strength of teams by analysing results (not based on these goal expectancy methods) could be equally effective.

"Similarly, and this is the point I was making, football teams don't play the same way when they are leading by two goals and when they are level with 15 minutes left. Stronger teams in particular, are disproportionately likely to score goals (at a rate above their goal expectancy) when the situation absolutely requires them to score. '

There is no data to confirm that the above is true.

2." What I was saying that an equally sophisticated model that measures relative strength of teams by analysing results(not based on these goal expectancy methods)could be equally effective."

I worked for a football trading house, and for pre-off they use POWER Models, i.e. player ratings, and in running they use Shot Strength data, which is manually keyed.

All models are based on relative strength of teams or goal expectancy.

What other method would there be?

What EMP confirms is the lack of knowledge of the effect of game state during the game for the simple reason that people do not look at the data.

There is data and there is interpretation.

Anonymous:

On Point Number 2: That's exactly what my post said, a model based on relative strength of teams (but not on goal expectancy) could be effective.

On Point Number 1: There may or may not be data to prove it, I certainly don't have that data, but that's not the same as data disproving it. I believe in it strongly enough to consider it a hypothesis worth testing through a system (which is working reasonably well, notwithstanding my manual error in missing out its biggest winner while writing out my picks in this league).
